Abstract

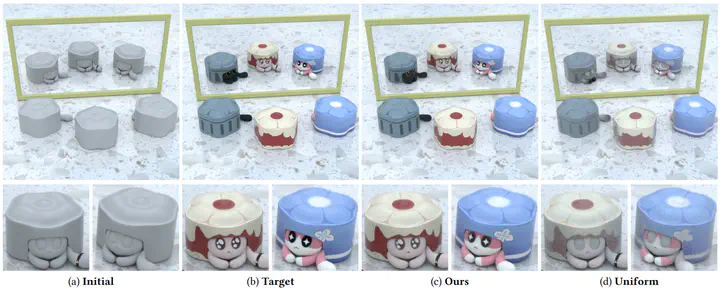

Inverse rendering is crucial for many scientific and engineering disciplines. Recent progress in differentiable rendering has made it possible to efficiently differentiate the full image formation process with respect to scene parameters, enabling gradient-based optimization. However, computational cost remains a significant challenge for differentiable rendering, particularly when every pixel is rendered in each iteration of inverse rendering from high-resolution or multi-view images, which makes each iteration slow. Naively reducing the sampling budget by uniformly sampling the pixels to render in each iteration can instead lead to high gradient variance, ultimately degrading overall performance. Our goal is to accelerate inverse rendering by reducing the sampling budget without sacrificing overall performance. In this paper, we introduce a novel image-space adaptive sampling framework that dynamically adjusts pixel sampling probabilities based on gradient variance and contribution to the loss function. Our approach efficiently handles high-resolution images and complex scenes, converging faster and performing better than uniform sampling, making it a robust solution for efficient inverse rendering.
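To make the idea of non-uniform pixel sampling concrete, the following is a minimal sketch of generic importance sampling over pixels, not the framework proposed in this paper. It assumes a hypothetical non-negative per-pixel `score` map (for instance, a running estimate of per-pixel gradient magnitude), blends it with a uniform distribution so that no pixel has zero probability, and reweights each sampled pixel by the inverse of its probability so that the estimated image-space sum stays unbiased. The function names and the `uniform_mix` parameter are illustrative assumptions.

```python
import numpy as np


def pixel_probabilities(score, uniform_mix=0.1):
    """Turn non-negative per-pixel scores into sampling probabilities.

    `score` is a hypothetical importance map; `uniform_mix` blends in a
    uniform distribution so every pixel keeps a non-zero probability.
    """
    score = np.asarray(score, dtype=np.float64).ravel()
    n = score.size
    uniform = np.full(n, 1.0 / n)
    total = score.sum()
    if total <= 0.0:
        # No information yet: fall back to uniform sampling.
        return uniform
    return (1.0 - uniform_mix) * (score / total) + uniform_mix * uniform


def sample_pixels(prob, budget, rng):
    """Draw `budget` pixel indices with replacement and their importance weights."""
    idx = rng.choice(prob.size, size=budget, replace=True, p=prob)
    # Weight 1 / (budget * p_i) makes the weighted sum an unbiased
    # estimate of the sum over all pixels.
    weights = 1.0 / (budget * prob[idx])
    return idx, weights


def estimate_total(per_pixel_value, idx, weights):
    """Importance-sampled estimate of sum(per_pixel_value) over all pixels."""
    per_pixel_value = np.asarray(per_pixel_value, dtype=np.float64).ravel()
    return float(np.sum(weights * per_pixel_value[idx]))
```

In an optimization loop, one would presumably render and differentiate only the pixels in `idx`, scale each pixel's loss term by its weight, and refresh `score` as new gradient information arrives. When the sampled quantity is exactly proportional to `prob`, the estimate has zero variance, which is the motivation for choosing probabilities that track each pixel's contribution.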