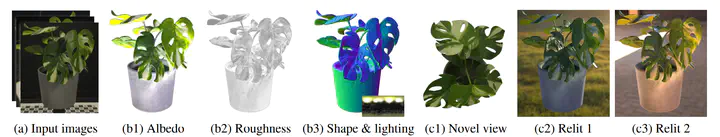

Neural-PBIR Reconstruction of Shape, Material, and Illumination

Cheng Sun, Guangyan Cai, Zhengqin Li, Kai Yan, Cheng Zhang, Carl Marshall, Jia-Bin Huang, Shuang Zhao, Zhao Dong

October 2023

Abstract

Reconstructing the shape and spatially varying surface appearance of a physical-world object, as well as its surrounding illumination, from 2D images (e.g., photographs) of the object has been a long-standing problem in computer vision and graphics. In this paper, we introduce a robust object reconstruction pipeline combining neural-based object reconstruction and physics-based inverse rendering (PBIR). Specifically, our pipeline first leverages a neural stage to produce high-quality but potentially imperfect predictions of object shape, reflectance, and illumination. Then, in the later stage, initialized by the neural predictions, we perform PBIR to refine the initial results and obtain the final high-quality reconstruction. Experimental results demonstrate that our pipeline significantly outperforms existing reconstruction methods in both quality and performance.

Publication

Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)

Cheng Sun

Research Scientist at NVIDIA

Guangyan Cai

Research Engineer

I am interested in physics-based differentiable rendering and its applications, such as inverse rendering.

Zhengqin Li

Research Scientist at Meta Reality Labs

Kai Yan

Ph.D. Candidate in Computer Science at UC Irvine

Cheng Zhang

Research Scientist at Meta Reality Labs

Carl Marshall

Research Scientist at Meta Reality Labs

Jia-Bin Huang

Associate Professor of Computer Science at University of Maryland, College Park

Shuang Zhao

Associate Professor of Computing and Data Science at the University of Illinois Urbana-Champaign

Zhao Dong

Senior Research Lead & Manager at Meta Reality Labs Research