Biography

My name is Guangyan Cai (蔡广彦), and I am a research engineer at SceniX, where I develop simulation engines for robotic learning systems.

I earned my Ph.D. in Computer Science from the University of California, Irvine, School of Information and Computer Sciences, under the supervision of Prof. Shuang Zhao. My doctoral research focused on physics-based differentiable rendering and its applications, including inverse rendering.

Previously, I received my B.S. in Computer Science from the University of California, San Diego, where I worked with Prof. Ravi Ramamoorthi.

Ph.D. in Computer Science, 2020 - 2025

University of California, Irvine

B.S. in Computer Science, 2016 - 2020

University of California, San Diego

Featured Publications

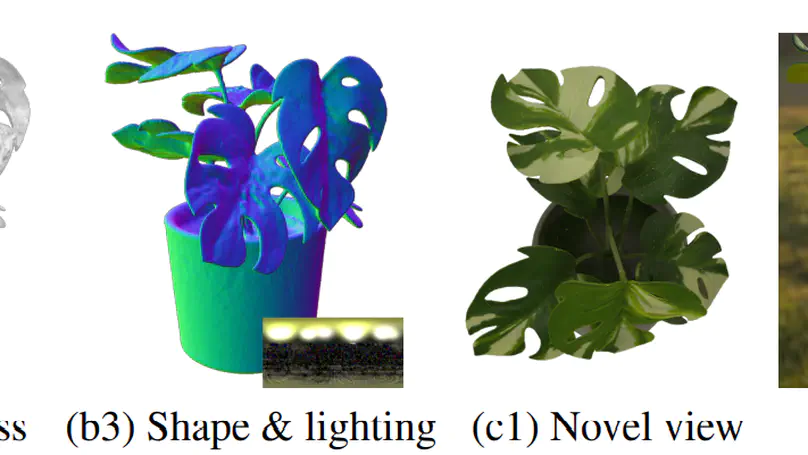

Reconstructing the shape and spatially varying surface appearance of a physical-world object, as well as its surrounding illumination, from 2D images (e.g., photographs) of the object has been a long-standing problem in computer vision and graphics. In this paper, we introduce a robust object reconstruction pipeline that combines neural object reconstruction with physics-based inverse rendering (PBIR). Specifically, our pipeline first uses a neural stage to produce high-quality but potentially imperfect predictions of object shape, reflectance, and illumination. Then, in the second stage, initialized by these neural predictions, we perform PBIR to refine the initial results and obtain the final high-quality reconstruction. Experimental results demonstrate that our pipeline significantly outperforms existing reconstruction methods in both quality and performance.

Mathematically representing the shape of an object is a key ingredient for solving inverse rendering problems. Explicit representations such as meshes are efficient to render in a differentiable fashion but have difficulty handling topology changes. Implicit representations such as signed-distance functions, on the other hand, offer better support for topology changes but are much more difficult to use for physics-based differentiable rendering. We introduce a new physics-based inverse rendering pipeline that uses both implicit and explicit representations. Our technique enjoys the benefits of both representations, supporting topology changes as well as differentiable rendering of complex effects such as environmental illumination, soft shadows, and interreflection. We demonstrate the effectiveness of our technique using several synthetic and real examples.

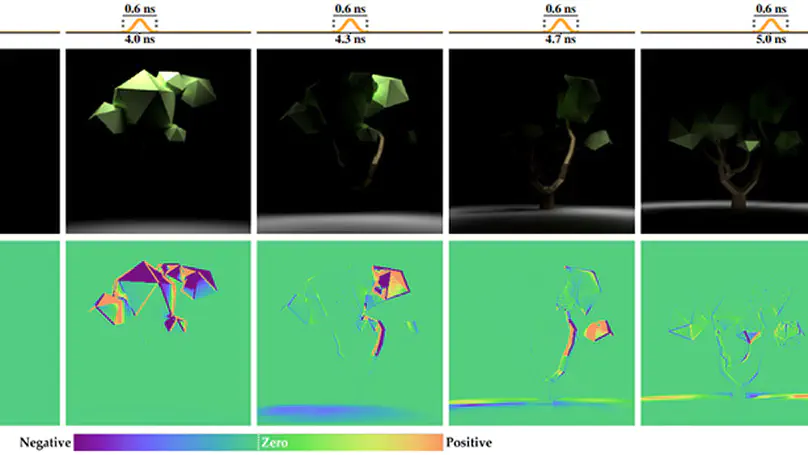

Continued advances in time-of-flight imaging devices have enabled new imaging pipelines with numerous applications. Consequently, several forward rendering techniques capable of accurately and efficiently simulating these devices have been introduced. However, general-purpose differentiable rendering techniques that estimate derivatives of time-of-flight images are still lacking. In this paper, we introduce a new theory of differentiable time-gated rendering that is general enough to differentiate with respect to arbitrary scene parameters. Our theory also allows the design of advanced Monte Carlo estimators capable of handling cameras with near-delta or discontinuous time gates. We validate our theory by comparing derivatives generated with our technique against those computed by finite differences. Further, we demonstrate the usefulness of our technique using a few proof-of-concept inverse-rendering examples that simulate several time-of-flight imaging scenarios.

Work Experience

Research-related

- Developing simulation engines for robotic learning systems.

- Investigated baking artifacts in materials reconstructed with inverse rendering and proposed a method to mitigate them.

- Participated in building a hybrid pipeline that combines NeRF and physics-based differentiable rendering for high-quality 3D reconstruction.

- Showcased our reconstruction results at Meta Connect 2022 (starting at 1:13:20).

- Published our work at ICCV 2023 (link).

- Developed a novel, cost-effective lighting representation called Envmap++ for accurate reconstruction of glossy objects in indoor environments.

- Conducted research on improving the fidelity of glossy object reconstruction under complex indoor illumination conditions.

- Submitted to arXiv (link).